Chatbot for Reporting Defects

Client: Facilities Management SaaS, B2B

2020

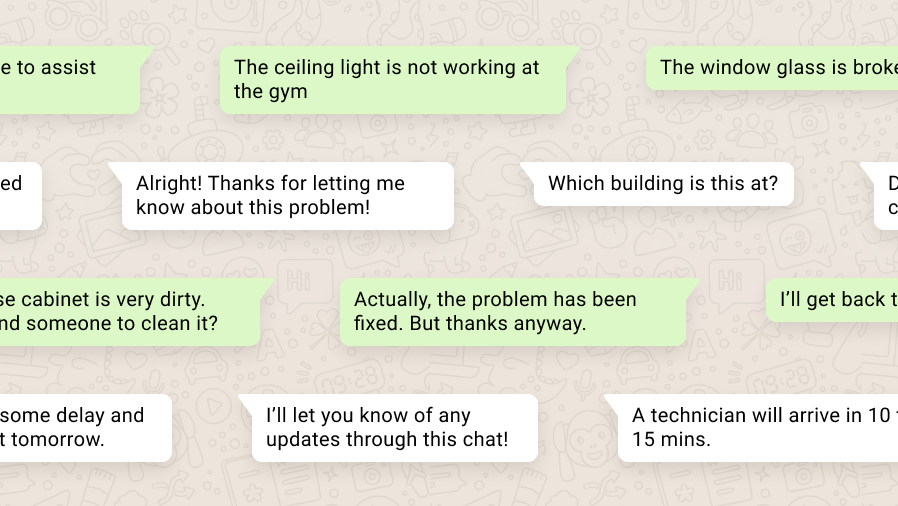

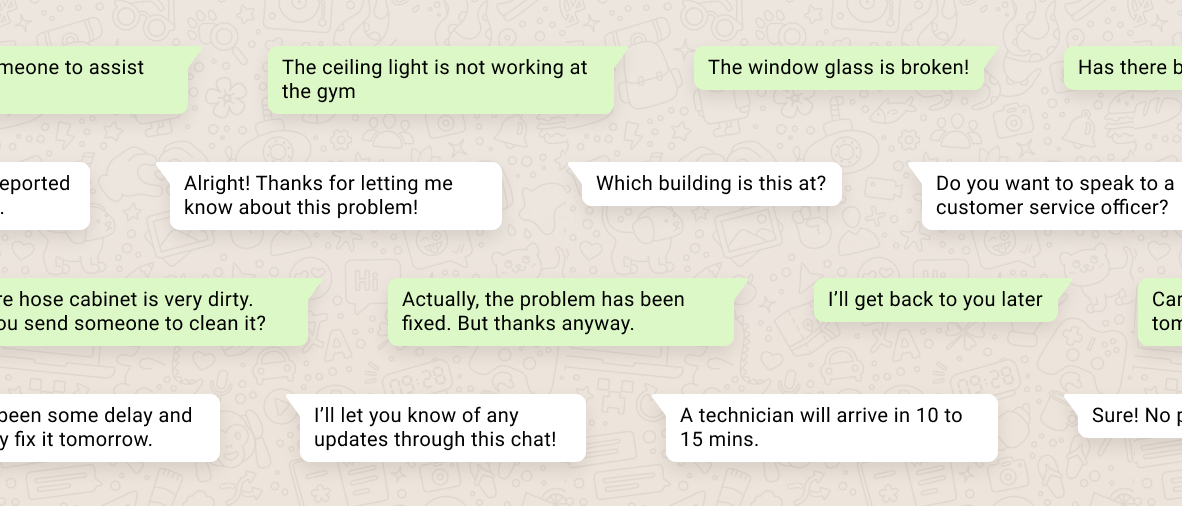

How can we make reporting defects easy and efficient through a platform everyone uses every day, WhatsApp?

How can we keep users informed of any progress made?

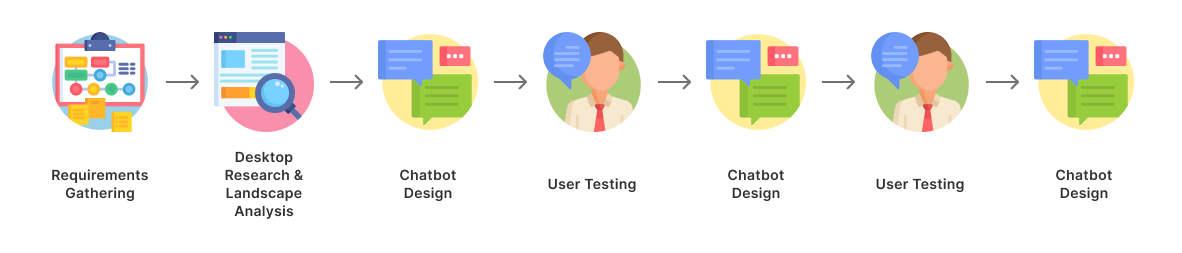

We first conducted a remote journey mapping workshop with the client to better understand the current process, its pain points, the different user groups and the ideal journey. We then refined our understanding through in-person interviews with three of the client's customers.

Defects could already be reported through a variety of channels, including a proprietary mobile app. The intention behind creating a chatbot was to make reporting defects easier and more convenient for users, as well as to streamline backend workflows and shorten processing times.

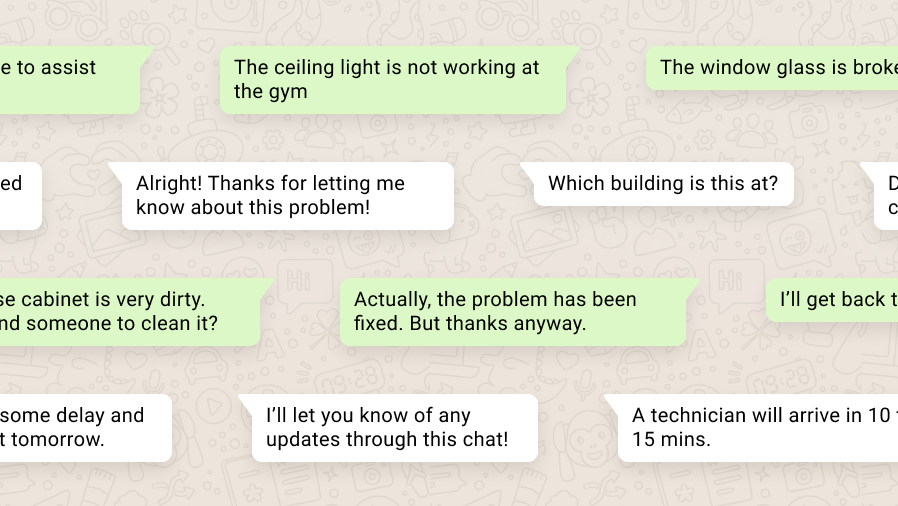

This was our first foray into chatbot and conversation design, so we did extensive desk research to understand best practices in chatbot design.

There are two key categories of chatbots: rule-based and NLP-based. We decided to use NLP to simplify what could otherwise be a lengthy reporting process. Building the chatbot on WhatsApp also meant that we could not rely on button inputs and had to pay more attention to conversation design.

While there are not many live WhatsApp chatbots based on NLP, we were able to gather best practices from other references, some of which include:

We also had to learn how Dialogflow, the platform that would power our NLP chatbot, worked. We learnt that NLP is not magic: it is still structured through rules and logic, albeit ones different from those of conventional chatbots. To design a chatbot that would be technically feasible, we had to equip ourselves with an understanding of how intents, entities, contexts and slot-filling work in Dialogflow.
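To make the idea of slot-filling concrete, here is a minimal Python sketch. It does not use the Dialogflow API, and it is not the chatbot we designed: Dialogflow matches intents and entities with machine learning, whereas this toy uses plain substring matching. The slot names, entity vocabularies and prompts are all hypothetical, chosen only for illustration. The `slots` dictionary carried from turn to turn plays a role loosely similar to a context.

```python
# Toy illustration of slot-filling for a hypothetical "report defect" intent.
# All slot names, vocabularies and prompts below are assumptions for illustration.

REQUIRED_SLOTS = ["defect_type", "location"]

# Hypothetical entities: each maps user phrasings (synonyms) to a canonical value.
ENTITIES = {
    "defect_type": {
        "leak": "leak",
        "leaking": "leak",
        "broken light": "lighting",
        "light": "lighting",
    },
    "location": {
        "lobby": "lobby",
        "level 3": "level 3",
        "car park": "car park",
    },
}

# Prompt to send when a required slot is still empty.
PROMPTS = {
    "defect_type": "What kind of defect is it?",
    "location": "Where is the defect?",
}


def extract_entities(message):
    """Return canonical entity values found in the message (substring match)."""
    text = message.lower()
    found = {}
    for slot, synonyms in ENTITIES.items():
        # Try longer phrases first so "broken light" wins over "light".
        for phrase in sorted(synonyms, key=len, reverse=True):
            if phrase in text:
                found[slot] = synonyms[phrase]
                break
    return found


def next_reply(slots, message):
    """Fill any slots found in the message, then ask for the first missing one."""
    for slot, value in extract_entities(message).items():
        slots.setdefault(slot, value)  # keep values captured in earlier turns
    for slot in REQUIRED_SLOTS:
        if slot not in slots:
            return PROMPTS[slot]
    return f"Thanks, I have logged a {slots['defect_type']} defect at {slots['location']}."
```

A user who gives everything in one message ("Broken light at level 3") gets the confirmation straight away, while a user who only says "There is a leak" is asked just for the missing location. This is the behaviour that lets an NLP-based flow shorten a reporting process that would otherwise be a fixed series of questions.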

With a better understanding of the business requirements and technical possibilities, we designed the chatbot's conversation flow and copy.

We tested the design with a total of 13 end users from 2 user groups across 2 rounds of testing.

Using the Wizard of Oz method, we simulated how the chatbot was intended to work according to the end user's input and left the end user to respond freely to the prompts.

We learnt from the sessions that although we had tried our best to keep things simple, the conversation was still too lengthy, wordy and tedious. A lot of what we thought was good-to-have information bogged down the conversation, and our challenge was now to avoid overdesigning.

The testing also allowed us to better understand how end users would interact with the chatbot, and revealed scenarios that we had not designed for. With this information, we could better design the NLP logic and refine the tone of the copy so that the experience would be a stress-free one.

Lastly, we discovered that the chatbot flow was not simply contained in a single user's perspective as we had thought. Different parts of the chat experience we had designed were more relevant for different types of users depending on their role and their seniority level. We refined the user groups into smaller user groups based on this, and introduced more conditional logic into the chatbot to send different messages to different users.
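As a rough sketch of what this kind of conditional logic can look like, the Python snippet below picks a different status update depending on the recipient's role and seniority. The role names, the seniority flag and the message wording are hypothetical and are not taken from the client's actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    role: str        # hypothetical roles: "tenant" or "technician"
    is_senior: bool  # hypothetical seniority flag


def status_message(user, defect_id, status):
    """Choose a message variant for a defect status update by role and seniority."""
    if user.role == "technician":
        # Technicians care about what has been assigned to them.
        return f"Defect {defect_id} assigned to you is now '{status}'."
    if user.is_senior:
        # Senior users get a pointer to an overview rather than a personal note.
        return f"Defect {defect_id} is now '{status}'. Reply SUMMARY for all open defects."
    # Default: the person who reported the defect.
    return f"Hi {user.name}, your reported defect {defect_id} is now '{status}'."
```

Keeping the branching in one small function like this makes it easy to review each user group's copy side by side when the tone or content needs refining.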

After we refined the design with insights from the testing sessions, it achieved a 100% success rate for key tasks and a 100% satisfaction rate in the last round of testing.

The client also found the insights and feedback from the customer interviews and user testing sessions extremely helpful in charting their product roadmap and prioritising future features.

The chatbot is currently being developed by the client before being piloted with their customers.